Threat Actors Use AI to Upgrade an Old Attack Vector…at Scale

As we usher in a new year (which somehow already feels long!), we also get to experience a lot of new & interesting security threats. You’ve probably been hearing all about how AI is going to create an endless supply of quality malware that will overwhelm us all with zero-day attacks. Frankly, that seems to be a lot more hype than reality…so far.

Interestingly, for all of the innovations in the world of cybersecurity - both offensively & defensively - the number one attack vector is not Distributed Denial of Service (DDoS) attacks, supercomputers cracking your encryption, automated dictionary attacks cracking your passwords, AI-authored zero-day exploits delivered through watering hole attacks, or any other innovative attack type.

The world leader in cyber-attack vectors is STILL phishing & social engineering delivered through bogus communications (email, text, social media platform messages, etc.). Why? Because most people have more tasks than time & do the majority of their communication (business & personal) through these channels…& NOBODY can make people 100% vigilant & security conscious. That’s just how humanity rolls.

The most financially damaging cyber-attacks - Business Email Compromise (BEC) & ransomware - both start almost exclusively through bogus communication. While many of those messages used to be easy to identify due to poor spelling, bad grammar, or other obvious indicators, AI has significantly improved the quality of phishing emails for threat actors. Now, anyone in the world - with little to no technical knowledge or English language proficiency - can create compelling messages (including AI-generated audio & video) that will challenge the teachings in most security awareness training programs…& that brings me to what I have started seeing recently.

An Emerging Twist on an Old Favorite

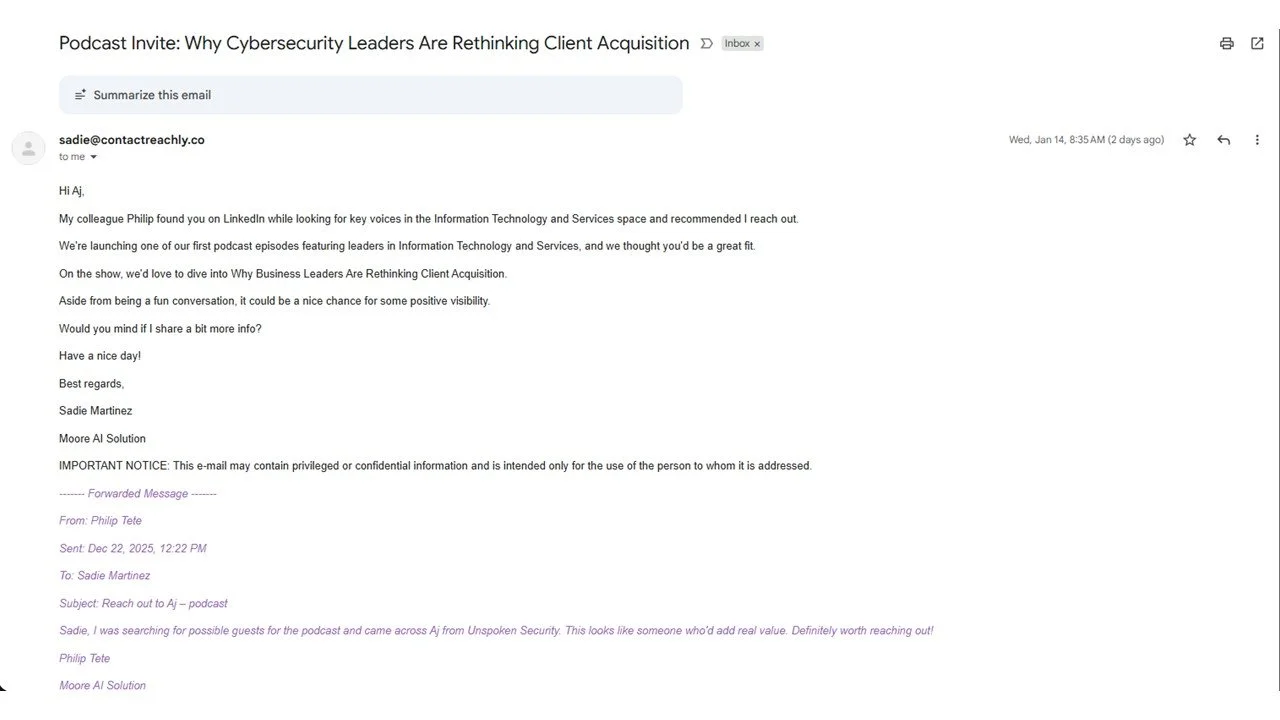

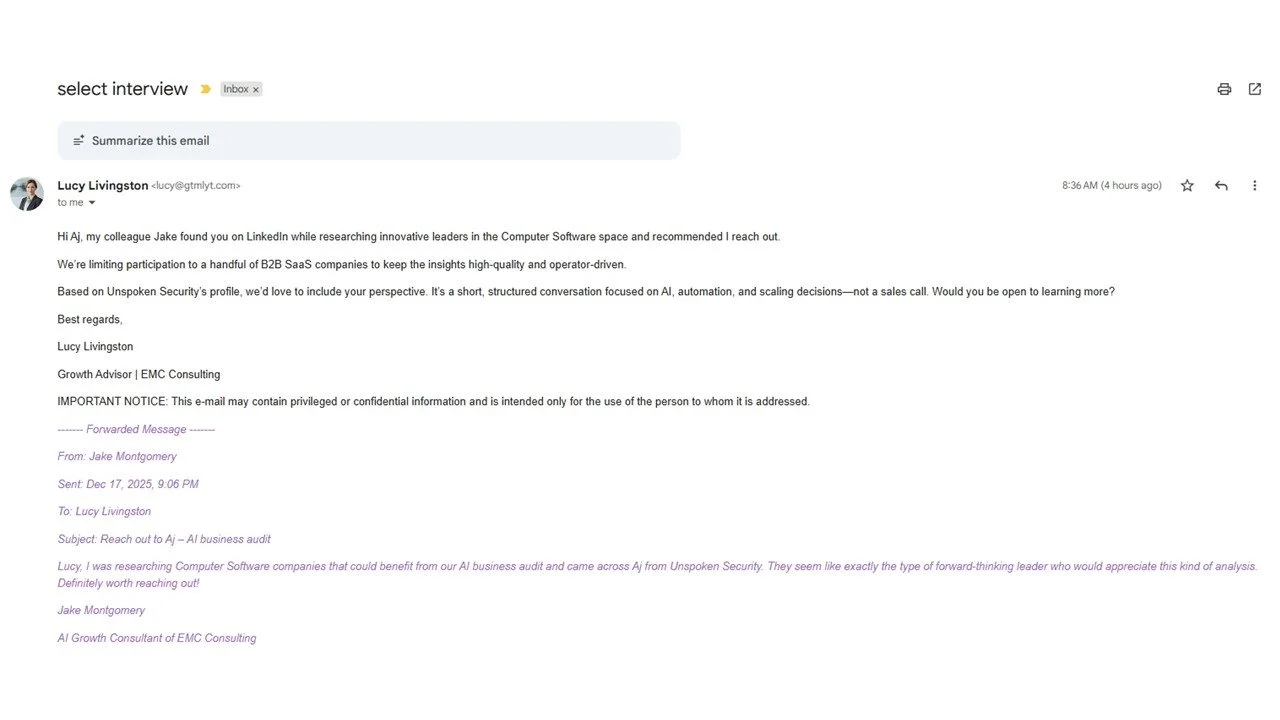

There appears to be a new trend in phishing wherein someone reaches out with an offer to do some consulting, appear as a podcast guest, or take up a similar opportunity tailored to appeal to a target’s specific interests. While that may sound like run-of-the-mill phishing, here is where it gets interesting: the outreach is coming from an AI-generated persona with a name, title, headshot, & email. Additionally, the outreach arrives in the form of an email thread showing that the target was “recommended” by someone else…& THAT “person” is ALSO an entirely fictional character. The screenshots above are real samples that I received this week.

In each of these samples, the sender’s title aligns with a real company, but their email address does not match that organization. The same is true for the “reference” in the email thread that purportedly generated the interest in me. Nobody in these emails “came across Aj on LinkedIn” & thought I’d be great for their podcast or interview because none of these “people” are real. Most people won’t take the time to search email addresses, check corporate websites, or scour LinkedIn to validate (or disprove) a persona. Even if you have the skills & instincts, it’s a time-consuming hassle in a world where few of us have “extra” time. But I was too curious to ignore these, so I did the digging (so you don’t have to).
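For anyone who wants to automate the first of those checks, here is a minimal Python sketch of the domain comparison. It assumes you already know the legitimate domain of the company named in the sender’s title. The function name `sender_matches_company`, the sample addresses, & the `example.com` domains are hypothetical illustrations, not values taken from the emails I received.

```python
from email.utils import parseaddr


def sender_matches_company(from_header: str, company_domain: str) -> bool:
    """Return True if the sender's email domain is the claimed company's
    domain or one of its subdomains (hypothetical helper)."""
    # parseaddr splits 'Display Name <user@host>' into (name, address).
    _, address = parseaddr(from_header)
    if "@" not in address:
        return False  # no usable address, so treat it as a mismatch
    sender_domain = address.rsplit("@", 1)[1].lower().rstrip(".")
    company_domain = company_domain.lower().rstrip(".")
    # Exact match, or a subdomain such as mail.example.com.
    return (
        sender_domain == company_domain
        or sender_domain.endswith("." + company_domain)
    )


if __name__ == "__main__":
    # Made-up senders claiming to work for a company that owns example.com.
    for header in (
        "Jane Doe <jane@example.com>",
        "Jane Doe <jane@example-podcasts.com>",
    ):
        print(header, "->", sender_matches_company(header, "example.com"))
```

A mismatch is not proof of a scam (plenty of real people write from personal addresses), & a match is not proof of legitimacy either, since display names & headers can be spoofed. Treat the result as one signal that tells you when to dig further.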

What is a little more concerning is that, while they didn’t do it in this case, it is not hard to go a few steps further to create fuller, deeper personas that would be harder to identify. For instance, if they created LinkedIn profiles that aligned with these personas - or created bogus websites hosting content that appeared to validate the associated information in these emails (& bogus LinkedIn profiles) - the scam becomes more believable. As those additional steps are neither difficult nor expensive, I would expect to see that expansion as an upgrade to these scams. Lucky for me, one of the easiest indicators of a bogus communication is usually the inability to render my name correctly. For all the brilliance of AI, my name (AJ or A.J.) invariably is written as “Aj” whenever automation is used. It’s a handy indicator for me that may not be near the top of anyone’s list of things to improve upon.

So, knowing that we’re beginning to see this trend of phishing in bogus email chains, I began to wonder how common this new twist on an old entry point is…& how easy it is to do. While I cannot yet answer the first question (but I’d love to hear from others who have seen this same kind of outreach), I have some thoughts on the second one. This is becoming VERY easy, which is why we should expect MUCH more of this kind of bogus communication in 2026 & beyond.

Evolution of the AI User…& the Impact on Everyone

While most people are currently using AI platforms like ChatGPT, Claude, & Gemini as nothing more than a search engine on steroids, more sophisticated users have been able to create personalized AI projects tailored towards their specific wants or needs…& those “advanced users” are actually STILL behind the curve when it comes to getting the most value & benefit from these AI platforms. The big leap forward in AI is agentic AI, defined as, “autonomous AI systems that can independently set goals, plan multi-step actions, and execute them with minimal human supervision, acting proactively rather than just reacting to commands, by reasoning, adapting, and using external tools to achieve complex objectives.” Think of this as your personal artificial workforce capable of not just helping you but actually doing the things you currently do. The most talented AI users I know created AI agents in 2025 that replaced Executive Assistants (EAs), Sales Development Representatives (SDRs), recruiters, marketers, & many other functions. I’m not referring to large corporations or teams of AI experts. I’m just talking about bright, talented, & curious individuals who have used AI to maximize their time. The same efforts, done at scale by tech giants, have created an AI revolution that we see rapidly erasing white-collar jobs & threatening to eventually eliminate entire career fields…& we are likely to see an exponential acceleration of those efforts & outcomes in 2026.

Agentic AI for Hire

The next step in this movement towards agentic AI, which is now underway, is companies selling these services commercially to virtually anyone…& THIS is what leads back to the emails I am starting to receive from artificial “people.” While most people will very likely evolve from basic AI user to AI power user & eventually AI agent creator, that will be a long road for the majority. But those who want the benefits of being an AI agent creator today - while they continue to learn how to do this independently or focus on other endeavors - can hire one of the companies that have recently launched these services commercially. For a fraction of the cost of paying minimum wage, virtually anyone can have dozens of completely artificial people specifically designed to autonomously fulfill customized roles. With the nominal additional cost of email addresses & domain names, virtually anyone can have a team of artificial “people” automatically generating & responding to phishing emails & curating scam conversations at an industrial scale. Now, when I say, “virtually anyone,” I mean it! My research indicates that the estimated cost to hire a purpose-built, artificial army is less than $2,000 per month.
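To put that figure in perspective, here is a rough back-of-the-envelope comparison in Python. Only the $2,000 monthly ceiling comes from the research mentioned above. The $7.25 hourly rate is the U.S. federal minimum wage, while the agent count of 24 (reading “dozens” as two dozen) & the 40-hour work week are assumptions chosen purely for illustration.

```python
MONTHLY_BUDGET = 2_000.00        # USD, upper bound quoted in the text
AGENT_COUNT = 24                 # assumption: "dozens" read as two dozen
FEDERAL_MIN_WAGE = 7.25          # USD per hour, U.S. federal minimum wage
HOURS_PER_MONTH = 40 * 52 / 12   # assumption: full-time, 40 hours per week


def cost_per_agent(budget: float, agents: int) -> float:
    """Monthly cost of a single AI agent if the budget is split evenly."""
    return budget / agents


def monthly_min_wage(rate: float, hours: float) -> float:
    """Monthly pay for one human worker at the given hourly rate."""
    return rate * hours


if __name__ == "__main__":
    agent = cost_per_agent(MONTHLY_BUDGET, AGENT_COUNT)
    human = monthly_min_wage(FEDERAL_MIN_WAGE, HOURS_PER_MONTH)
    print(f"Per agent:  ${agent:,.2f} per month")
    print(f"Per worker: ${human:,.2f} per month")
    print(f"Agent cost as a share of one worker: {agent / human:.1%}")
```

Under these assumptions, each artificial “person” costs roughly $83 per month against about $1,257 for one full-time minimum-wage worker. Change the assumed agent count or wage & the ratio shifts, but the order of magnitude is the point.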

Now What?

The use of bogus communications to engage potential victims is, above all else, a numbers game…& threat actors are getting tools that will exponentially increase the number of high-quality phishing campaigns in 2026. The same security measures regarding phishing & social engineering threats still apply. We can still block incoming email from suspicious domains. We can still train people on what to look for & what not to click on. But we are going to see larger volumes of increasingly high-quality phishing scams, & phishing has always benefitted from increases in the quality & quantity of engagements with targets.

I’d say that 2026 is going to be a BIG year for phishing & social engineering…but THAT is nothing new.